Unveiling Mithril

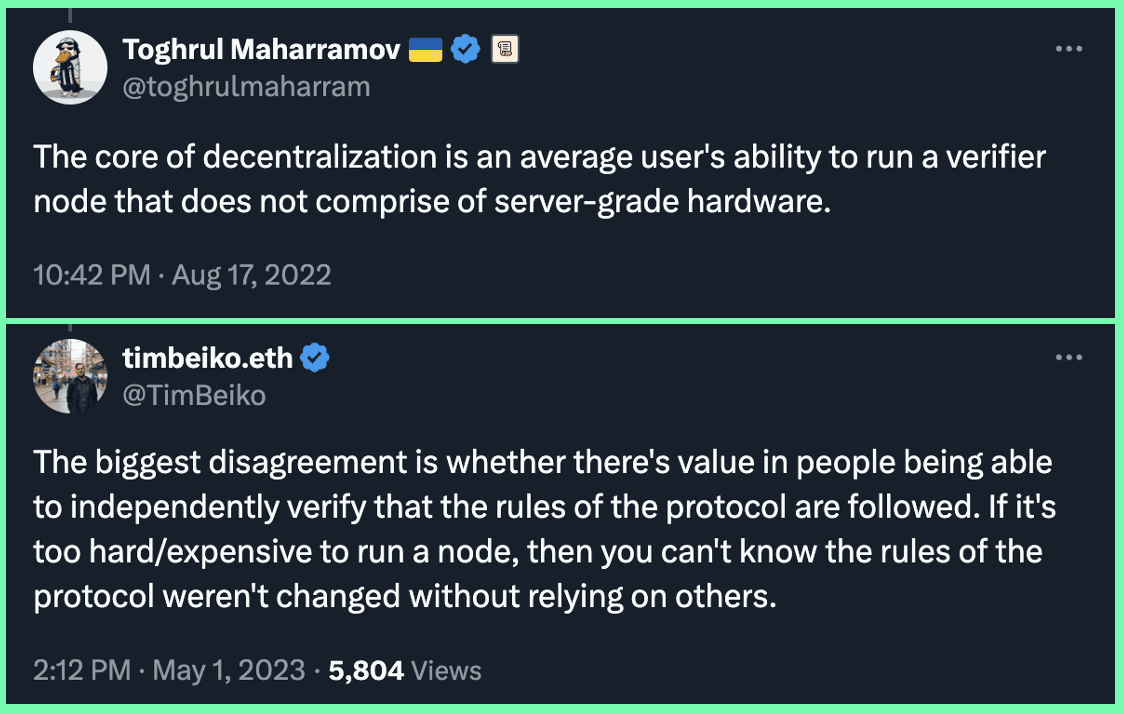

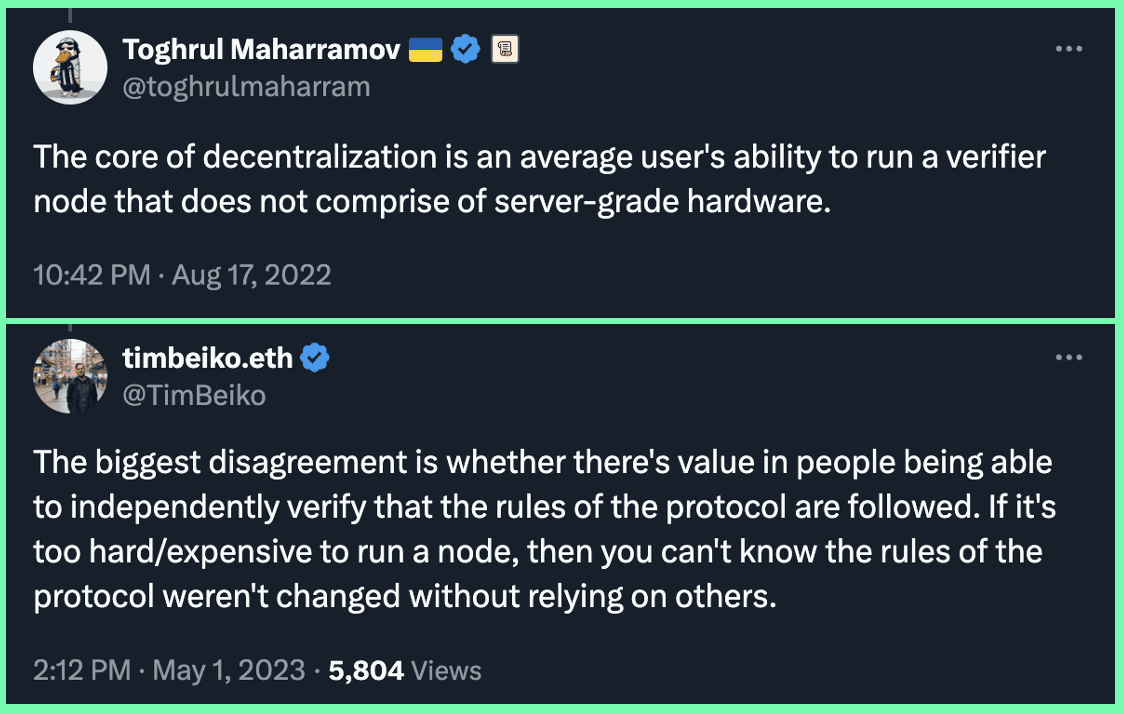

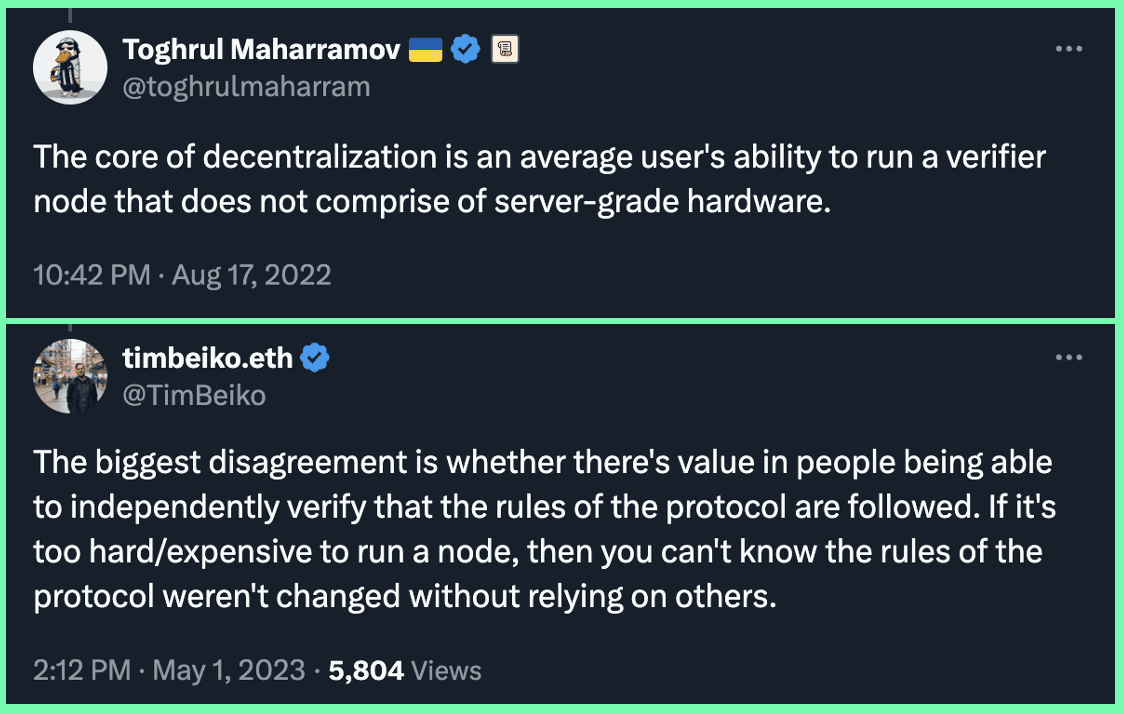

Solana has long been criticized for the relatively expensive and complex system requirements of running a full node, which in most parts of the world normally means relying on a datacenter server to fully verify the chain. Supporters of competing blockchains (e.g. the Ethereum ecosystem) have correctly pointed to these barriers to verifying Solana as one of its largest weaknesses in terms of decentralization.

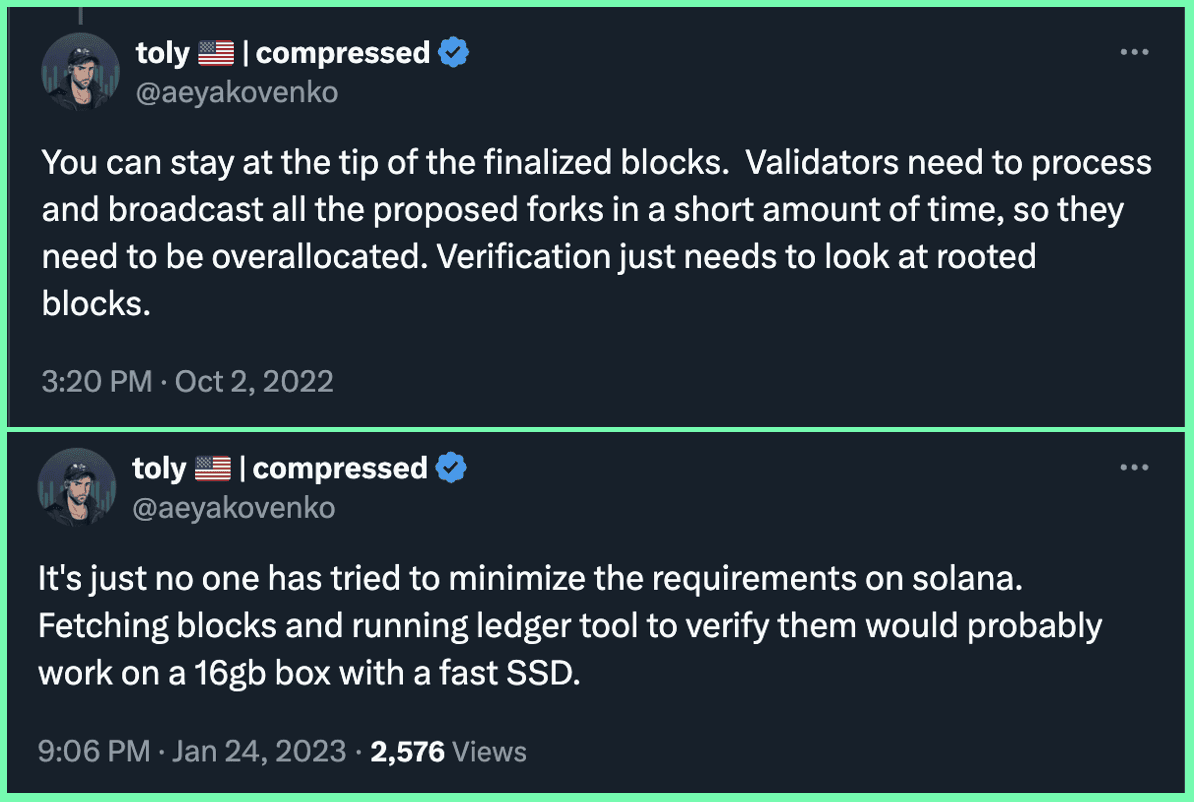

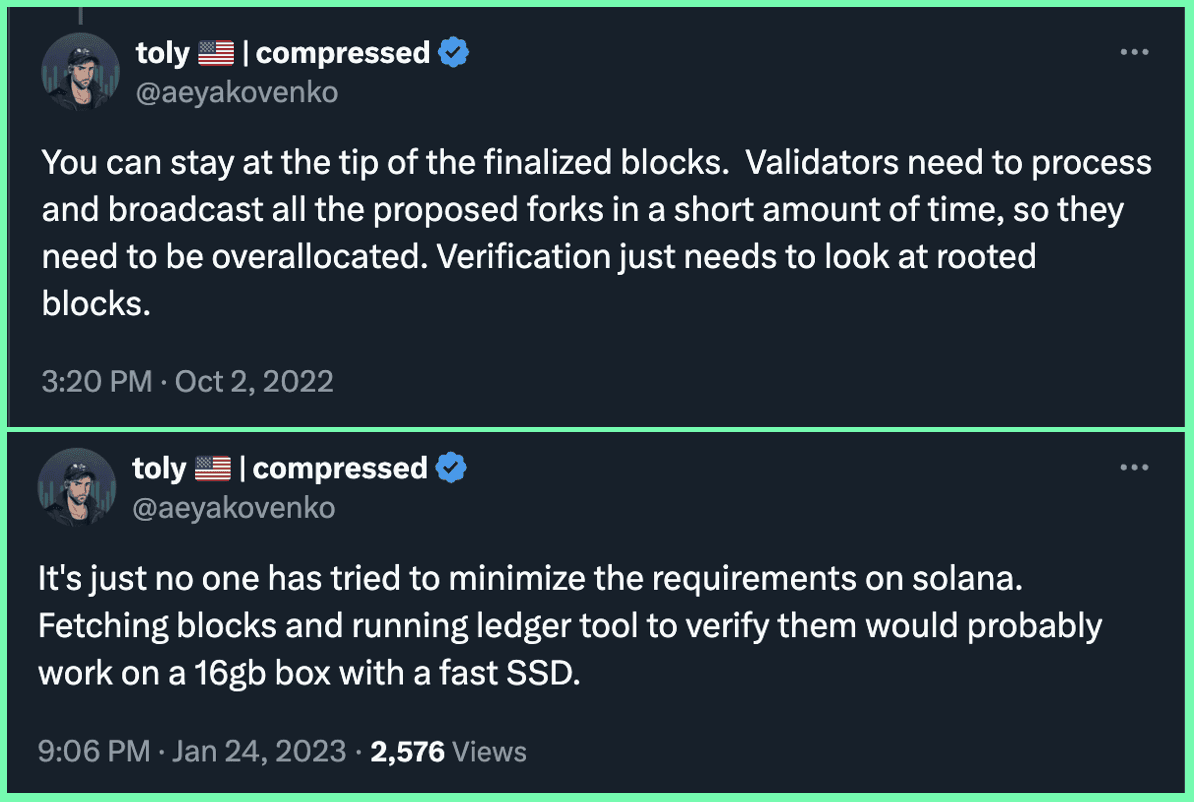

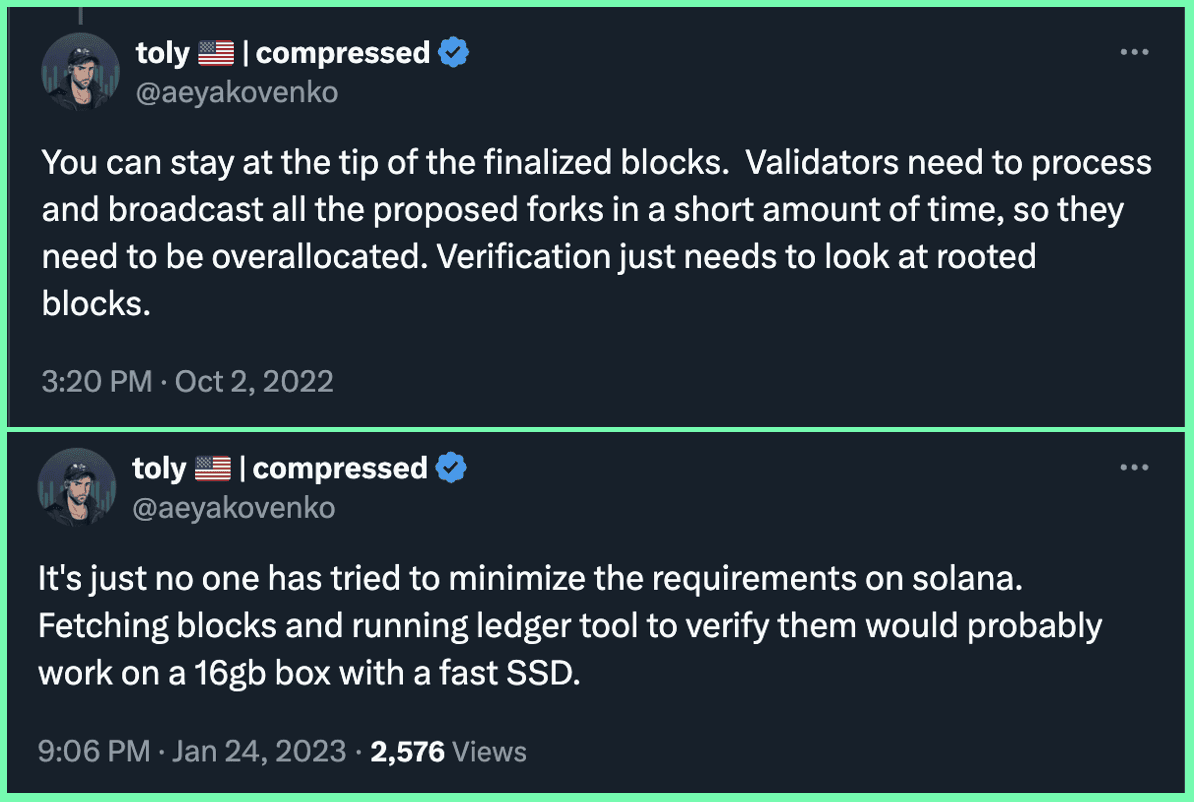

Fortunately, Solana can be made significantly easier to verify by removing some of the heavier functionality required for validator and RPC nodes and designing a streamlined “verifying full node” specification focused on personal verification of the chain. More importantly, this can be accomplished without modifying Solana’s underlying architecture, a possibility that Solana co-founder Anatoly Yakovenko has discussed for some time.

Seeing that it was possible to meaningfully improve Solana’s decentralization with a more minimal full node type, the Overclock team decided to spearhead development of a new client called Mithril. Mithril is written in Go, a popular, performant, and readable language, and is built to run on more economical, user-friendly consumer hardware or cloud platforms. It builds on the foundations of Radiance, an early Solana client written in Go, and its name is a nod to the light, protective, “radiant” metal of the same name found in The Lord of the Rings.

“Mithril! All folk desired it. It could be beaten like copper, and polished like glass; and the Dwarves could make of it a metal, light and yet harder than tempered steel. Its beauty was like to that of common silver, but the beauty of mithril did not tarnish or grow dim.”

The two overarching goals for Mithril are:

Lowering the barriers to securely accessing and verifying Solana by creating a more streamlined, resource-efficient full node client.

Lowering the barriers to understanding the Solana core protocol by developing a simpler, modular codebase in Go, an easy-to-understand programming language.

Enhancing Security with Full Nodes

Operating a full node provides the most secure method of accessing a blockchain, enabling full observation and verification of the ongoing changes to the chain’s state as new blocks are added. Once a Solana full node client (e.g. Agave) is initialized on a server, verifying the chain proceeds as follows:

Step 1 - Initial Sync: A recent “snapshot” of Solana’s full state is downloaded from a “trusted” source on the network (e.g. RPCs, validators) and unpacked to populate the node's local view of that state in AccountsDB. This state takes the form of “accounts” that hold data such as users’ token balances. Older snapshots can be downloaded from sources such as Triton’s Project Yellowstone, but syncing from a snapshot taken at any point after Solana’s genesis carries additional trust assumptions (related context: “weak subjectivity”). While joining the network from a recent snapshot is technically less secure than replaying from genesis, running a recently synced full node is still the most significant step one can take to securely access Solana blockchain state today.
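To make Step 1 concrete, here is a minimal Go sketch of loading an unpacked snapshot into an in-memory stand-in for AccountsDB. The `Account` fields mirror the basic Solana account layout (lamports, owner, data, executable flag); the `SnapshotEntry` type, the map-based store, and the rule that the version written in the highest slot wins when an address appears more than once are simplifying assumptions for illustration, not Agave's or Mithril's actual storage format.

```go
package main

import "fmt"

// Pubkey is a 32-byte account address.
type Pubkey [32]byte

// Account mirrors the basic fields of a Solana account.
type Account struct {
	Lamports   uint64
	Owner      Pubkey
	Data       []byte
	Executable bool
}

// SnapshotEntry is a hypothetical record read from an unpacked snapshot:
// one version of an account plus the slot in which it was written.
type SnapshotEntry struct {
	Slot    uint64
	Address Pubkey
	Account Account
}

// AccountsDB is a toy in-memory stand-in for the node's account store.
type AccountsDB struct {
	accounts map[Pubkey]Account
	slots    map[Pubkey]uint64
}

func NewAccountsDB() *AccountsDB {
	return &AccountsDB{accounts: map[Pubkey]Account{}, slots: map[Pubkey]uint64{}}
}

// LoadSnapshot populates the store. If an address appears more than once,
// the version written in the highest slot is kept (assumed rule).
func (db *AccountsDB) LoadSnapshot(entries []SnapshotEntry) {
	for _, e := range entries {
		if prev, ok := db.slots[e.Address]; ok && prev > e.Slot {
			continue // an newer version is already loaded
		}
		db.accounts[e.Address] = e.Account
		db.slots[e.Address] = e.Slot
	}
}

// Get returns the loaded account for addr, if any.
func (db *AccountsDB) Get(addr Pubkey) (Account, bool) {
	a, ok := db.accounts[addr]
	return a, ok
}

func main() {
	alice := Pubkey{1}
	db := NewAccountsDB()
	db.LoadSnapshot([]SnapshotEntry{
		{Slot: 100, Address: alice, Account: Account{Lamports: 5}},
		{Slot: 120, Address: alice, Account: Account{Lamports: 7}},
		{Slot: 110, Address: alice, Account: Account{Lamports: 6}},
	})
	a, _ := db.Get(alice)
	fmt.Println(a.Lamports) // 7
}
```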

Step 2 - Fetch: Once Step 1 is complete and the node has loaded recent state from a snapshot, it typically lags several minutes or more behind the leading edge of the network, where new blocks are being produced. This leading edge is also known as the "tip" of the chain. To build its local copy of the ledger up to the tip, the node begins fetching new blocks (in shred format) from other validators through Solana’s Repair Service and, once closer to the tip, starts receiving blocks (also as shreds) from the network via Turbine. These shreds are received at the node’s Transaction Validation Unit (TVU) port. In parallel with this fetching process, the full node is busy verifying (aka “replaying”) these blocks in canonical order, cycling through Steps 3 and 4 and advancing its state until it catches up to the tip of the chain. Note that for full nodes, blocks do not need to come from trusted sources, since each node is capable of fully verifying and executing them: if a source is malicious, the full node client simply rejects the invalid block, because all consensus and state transition rules are enforced by the client software (see Steps 3 and 4). A malicious supermajority of validators can forge non-compliant transactions and blocks and carry on with consensus, but a full node (including the honest, non-malicious validators participating in consensus) will detect these violations and halt. This is the core of what makes full nodes secure: a malicious source must break fundamental cryptographic security (and consensus trust assumptions) to trick a full node into accepting an invalid state transition or a double spend.
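The key property of Step 2 is that blocks may arrive in any order from repair and Turbine, yet must be replayed parent first. The sketch below assumes a block can be reduced to its slot and parent slot (the `Block` and `Replayer` types are hypothetical). It buffers blocks whose parent has not been replayed yet and drains them as soon as the parent is; the actual verification and execution of Steps 3 and 4 are left as a comment.

```go
package main

import "fmt"

// Block is a simplified block: its slot and the slot of its parent.
type Block struct {
	Slot   uint64
	Parent uint64
}

// Replayer buffers blocks that arrive out of order and replays each one only
// after its parent has been replayed, so execution follows parent-to-child order.
type Replayer struct {
	replayed map[uint64]bool
	waiting  map[uint64][]Block // parent slot -> blocks waiting on that parent
	Order    []uint64           // slots in the order they were replayed
}

// NewReplayer starts from the slot of the snapshot the node booted from.
func NewReplayer(snapshotSlot uint64) *Replayer {
	return &Replayer{
		replayed: map[uint64]bool{snapshotSlot: true},
		waiting:  map[uint64][]Block{},
	}
}

// OnBlock is called for every block assembled from shreds, whether they came
// from repair or from Turbine.
func (r *Replayer) OnBlock(b Block) {
	if !r.replayed[b.Parent] {
		r.waiting[b.Parent] = append(r.waiting[b.Parent], b)
		return
	}
	queue := []Block{b}
	for len(queue) > 0 {
		cur := queue[0]
		queue = queue[1:]
		if r.replayed[cur.Slot] {
			continue // duplicate delivery
		}
		// A real node would verify consensus and execute cur here (Steps 3
		// and 4) and discard it instead of marking it replayed if invalid.
		r.replayed[cur.Slot] = true
		r.Order = append(r.Order, cur.Slot)
		queue = append(queue, r.waiting[cur.Slot]...)
		delete(r.waiting, cur.Slot)
	}
}

func main() {
	r := NewReplayer(100)
	// Slots 102 and 103 arrive before their ancestor, 101.
	for _, b := range []Block{{Slot: 102, Parent: 101}, {Slot: 103, Parent: 102}, {Slot: 101, Parent: 100}} {
		r.OnBlock(b)
	}
	fmt.Println(r.Order) // [101 102 103]
}
```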

Step 3 - Consensus Verification: After completing Step 1, a full node knows the current epoch’s validator stake weights and leader schedule. With this information, it can determine how much stake weight has voted on a block and whether a specific block has achieved fork choice consensus, which requires at least two thirds (roughly 67%) of the network’s stake weight to vote for its block hash. Valid blocks that go on to achieve a supermajority of consensus votes are said to be "confirmed" and later become “finalized.” The node obtains this information by scanning the contents of subsequent blocks since, notably, Solana votes are included as transactions in blocks.
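The stake check in Step 3 boils down to a stake-weighted tally. This sketch assumes votes have already been extracted from later blocks into a hypothetical `Vote` type and that each vote account's stake for the epoch is known from the snapshot. It counts each vote account once and compares against two thirds of total stake using integer arithmetic; treating the cut-off as “at least” rather than “strictly more than” two thirds is an assumption of the sketch.

```go
package main

import "fmt"

// Pubkey identifies a vote account.
type Pubkey [32]byte

// Vote is a simplified vote found in a later block: which vote account
// voted and for which block hash.
type Vote struct {
	Voter     Pubkey
	BlockHash [32]byte
}

// StakeFor sums the stake behind votes for target, counting each vote
// account at most once. stakes is the epoch stake distribution from Step 1.
func StakeFor(target [32]byte, votes []Vote, stakes map[Pubkey]uint64) uint64 {
	seen := map[Pubkey]bool{}
	var sum uint64
	for _, v := range votes {
		if v.BlockHash != target || seen[v.Voter] {
			continue
		}
		seen[v.Voter] = true
		sum += stakes[v.Voter] // voters without stake contribute zero
	}
	return sum
}

// TotalStake sums the stake of every vote account in the epoch.
func TotalStake(stakes map[Pubkey]uint64) uint64 {
	var total uint64
	for _, s := range stakes {
		total += s
	}
	return total
}

// HasSupermajority reports whether voted is at least two thirds of total,
// using integer math to avoid floating-point rounding.
func HasSupermajority(voted, total uint64) bool {
	return total > 0 && 3*voted >= 2*total
}

func main() {
	a, b, c := Pubkey{1}, Pubkey{2}, Pubkey{3}
	stakes := map[Pubkey]uint64{a: 40, b: 30, c: 30}
	h := [32]byte{0xAB}
	votes := []Vote{{a, h}, {b, h}, {b, h}} // b's repeated vote is counted once
	voted := StakeFor(h, votes, stakes)
	fmt.Println(voted, HasSupermajority(voted, TotalStake(stakes))) // 70 true
}
```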

Step 4 - Verification and Execution: During the catch-up process described in Step 2, the transactions contained in blocks are validated and executed. Transactions direct state changes, and validating them involves performing several checks in accordance with the Solana protocol.

When a transaction is executed, its lifecycle is as follows:

Protocol compliance checks are performed on the transaction, including: age (only recent, non-duplicate transactions are valid), Ed25519 signature, structure (e.g. the 1232-byte size limit), transaction fee, and more.

Program bytecode is loaded from storage based on the program address specified in the transaction, and the sBPF virtual machine is provisioned to execute the transaction’s instructions (sBPF is one component of the Solana Virtual Machine, or SVM).

Accounts referenced by the transaction are checked, loaded from storage into memory, and passed to the SVM.

Program bytecode is executed.

Modified accounts are synced back into storage (AccountsDB) in their updated state.

Additionally, full nodes perform higher-level validity checks related to PoH, the leader schedule, various block- and entry-level constraints, cryptographic commitments (e.g. BankHash), and more. These are part of a broader ruleset known as Solana’s State Transition Function (STF), which we cover in a bit more detail below and in more depth in a future article.

Step 5 - Repeat: Once a full node has caught up to the tip, it repeats Steps 2 through 4 to verify the chain as leader validators continuously produce new blocks.

Important Side Note: The steps above are conceptual and most relevant to simple full node verification. What Solana validator and RPC nodes actually do is execute first while maintaining two states (user account state and tower state). As execution proceeds, tower state is updated and used to determine which forks to keep and which to prune. This matters when considering certain tradeoffs that can reduce full node resource requirements, and we’ll revisit it later. For now, though, the steps above can be thought of as a general model of how most blockchains work: fetch blocks, verify consensus on them, and execute the transactions they contain. Solana’s actual approach is referred to as optimistic execution, because a block is executed immediately and voted on before it achieves full consensus. Solana can execute blocks optimistically because it leverages PoH for synchronization and fast block propagation (via Turbine) to greatly increase the probability that an executed block will be confirmed. TowerBFT is built to support switching forks when that “optimism” is misplaced.

The state changes that result from executing a block are not limited to those produced by executing transactions via the SVM (covered in Step 4); they are dictated by a wider ruleset that we’ll refer to as Solana’s State Transition Function (STF). The STF encodes the core Solana protocol and is independent of any client implementation. Some of the STF components outside of the SVM include:

Block-level rules such as compute unit limits, block size, transaction ordering rules, and cryptographic commitments (entry_hash, bank_hash), which must be verified in order to proceed with execution. This is critical because two clients with identical SVM implementations can still end up with diverging state if one treats a block as valid while the other treats it as invalid.

Epoch-level changes that are also relevant to verifying state transitions, such as staking reward payouts, validator stake weight changes, the leader schedule, and calculation of the Epoch Accounts Hash.
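To illustrate why block-level rules matter independently of the SVM, the sketch below checks a single rule, a per-block compute-unit cap, and runs it with two different limits. The limit values are invented for the example and the cost model is reduced to a plain sum; real clients compute transaction costs in more detail.

```go
package main

import "fmt"

// Tx carries only what this block-level rule needs: its compute-unit cost.
type Tx struct {
	ComputeUnits uint64
}

// BlockWithinCULimit applies one block-level rule: the summed compute units
// of a block's transactions must not exceed maxBlockCU.
func BlockWithinCULimit(txs []Tx, maxBlockCU uint64) bool {
	var used uint64
	for _, tx := range txs {
		used += tx.ComputeUnits
		if used > maxBlockCU {
			return false
		}
	}
	return true
}

func main() {
	block := []Tx{{ComputeUnits: 600_000}, {ComputeUnits: 500_000}}
	// Two hypothetical clients execute transactions identically but were
	// configured with different (illustrative) block limits.
	clientA := BlockWithinCULimit(block, 1_200_000)
	clientB := BlockWithinCULimit(block, 1_000_000)
	fmt.Println(clientA, clientB) // true false
}
```

Client A would go on to execute the block and advance its state, while client B would reject it, so the two diverge even though their transaction execution is identical.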

The STF is crucial to consider because any alteration to it changes the set of possible state changes to the Solana blockchain and could lead to a hard fork. Changes to the STF are reviewed through Solana’s SIMD process, enabled on Testnet via the feature gate activation process, and finally, after a period of testing (and sometimes validator governance), activated on Mainnet Beta. Rent collection, for example, is an aspect of Solana’s STF that has been deprecated and will be removed, while a likely future change, ApEX, would significantly alter the STF while leaving the SVM intact.
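Feature gates are what let an STF change take effect at a specific point in the chain. A rough way to picture this is a set of features with activation slots that rule code consults before applying a rule. The feature name below is a hypothetical placeholder rather than a real feature ID, and the sketch simply compares slots, whereas real activations are recorded on-chain and generally applied at epoch boundaries. A full node that applied an activation at a different point than the rest of the network would diverge from it.

```go
package main

import "fmt"

// FeatureSet maps a feature name to the slot at which it became active.
type FeatureSet map[string]uint64

// Active reports whether feature is enabled when processing slot.
func (fs FeatureSet) Active(feature string, slot uint64) bool {
	at, ok := fs[feature]
	return ok && slot >= at
}

// collectRent stands in for an STF step that only runs while the
// (hypothetical) "disable_rent_collection" feature is inactive.
func collectRent(fs FeatureSet, slot uint64) bool {
	return !fs.Active("disable_rent_collection", slot)
}

func main() {
	fs := FeatureSet{"disable_rent_collection": 1000}
	fmt.Println(collectRent(fs, 999), collectRent(fs, 1000)) // true false
}
```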

Because the formal definitions of the SVM and Solana’s STF haven’t been fully settled yet, and for the sake of brevity, we plan to discuss them in a future article.

Alternative Methods to Access Solana State

Users often choose the path with least friction for accessing on-chain information, such as checking their Solana wallet details and using Jupiter for token swaps, typically relying on third-party RPC providers. While efforts can be made to enhance the verification of this data, these improvements come with additional trust assumptions, which limit their security compared to a full node. For example:

Using an RPC service without running your own full node or light client (discussed next) means that you need to trust the RPC provider. While the RPC provider runs its own full node to query and verify information from the chain, users must depend on the provider to relay this information honestly. Censorship (e.g. jurisdiction-based) is another potential concern when depending on RPC providers. This is by far the easiest and most common way users access the chain on Solana and other blockchains, and it is the default when using crypto wallets and dApps.

Using an SPV light client to verify state means that a user is trusting a committee of validators, the standard being a supermajority of validators by stake. This is better than relying purely on an RPC service, but it still requires a significant trust assumption about block validity compared with a full node. To clarify further, relying on a supermajority of validators for fork choice is acceptable, but relying on it to attest to the state of accounts greatly reduces security and also creates an “incentive to corrupt” by shifting additional power to validators and away from users. SPV light clients for Solana are currently under development by the Tinydancer team.

Using a trust-minimized light client (one built with either ZK or fraud proofs) adds an additional trust assumption in the form of the proof system, but it is the most secure of the three options mentioned. Although trust-minimized light clients are certainly valuable and offer considerably stronger security guarantees than the two options above, they are not completely trustless.

Validity light clients: While ZK protocols offer strong guarantees, they add an extra layer of implementation and cryptographic assumptions, which presents risk that is not inherent to a full node. Trust-minimized ZK light clients for Solana are also still a considerable time away from being implemented, and there are liveness risks associated with prover centralization.

Fraud provable light clients: These directly rely on full nodes to raise fraud proofs, and are thus complementary to and benefit from having a large number of full nodes. Note that you only need a single honest full node to raise the fraud proof, so having a large number of full nodes is akin to a large number of people watching the network to raise a proof.
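To put rough numbers on the single-honest-watcher argument, consider a simple model resting on an explicit assumption: each of n full nodes independently has probability p of being honest, online, and watching a given block. The probability that at least one of them is in a position to raise a fraud proof is then:

$$
P(\text{at least one honest watcher}) = 1 - (1 - p)^{n}
$$

With a purely illustrative p = 0.1, a single node gives 0.1, while n = 50 gives 1 - 0.9^50 ≈ 0.995. Real-world failures are correlated (shared client software, shared hosting providers), so independence makes this an optimistic estimate, but the qualitative point holds: each additional independent full node shrinks the chance that nobody is watching.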

Aside from verification of the chain, two other key benefits of running a full node include:

Eclipse resistance: running a full node contributes to the overall security of the chain by making the network mesh more robust.

Hard fork resistance: someone running a full node controls the code on their machine and cannot be hard forked against their will.

Eclipse Resistance

The security of a network is multifaceted, and many discussions focus on the value of economic security in raising the cost of attacks on safety and liveness. Safety in Byzantine Fault Tolerant (BFT) consensus is violated when two honest nodes finalize different blocks at the same height. If a group of entities acquires enough stake, it is possible for them to:

Trigger a broad network fork if the entities hold more than 33% of the stake and are capable of partitioning the network.

Isolate a single node on a fork if the entities hold more than 67% of the stake and are capable of cutting that node off from the rest of the network.
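Reading “33%” and “67%” as one third and two thirds of total stake, the two thresholds above can be written as a small classification. This is only a restatement of those conditions with hypothetical names; the network-level ability to partition the network or isolate a node is assumed rather than modeled.

```go
package main

import "fmt"

// Capability describes what a stake holder could attempt, given the
// network-level ability discussed in the text.
type Capability int

const (
	BelowThresholds Capability = iota
	CanForkWithPartition
	CanIsolateSingleNode
)

func (c Capability) String() string {
	switch c {
	case CanIsolateSingleNode:
		return "can isolate a single node on a fork (needs network isolation too)"
	case CanForkWithPartition:
		return "can trigger a broad fork (needs network partitioning too)"
	default:
		return "below both thresholds"
	}
}

// Classify compares attacker stake with the 1/3 and 2/3 thresholds using
// integer math. Holding more than 2/3 also implies holding more than 1/3.
func Classify(attacker, total uint64) Capability {
	switch {
	case 3*attacker > 2*total:
		return CanIsolateSingleNode
	case 3*attacker > total:
		return CanForkWithPartition
	default:
		return BelowThresholds
	}
}

func main() {
	for _, pct := range []uint64{20, 40, 70} {
		fmt.Printf("%d%%: %v\n", pct, Classify(pct, 100))
	}
}
```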

Partitioning a network is very difficult, as it involves ensuring that one section of the network does not see traffic from another. A large network mesh also makes these malicious forks easier to detect, decreasing the chance that one could ever be finalized.

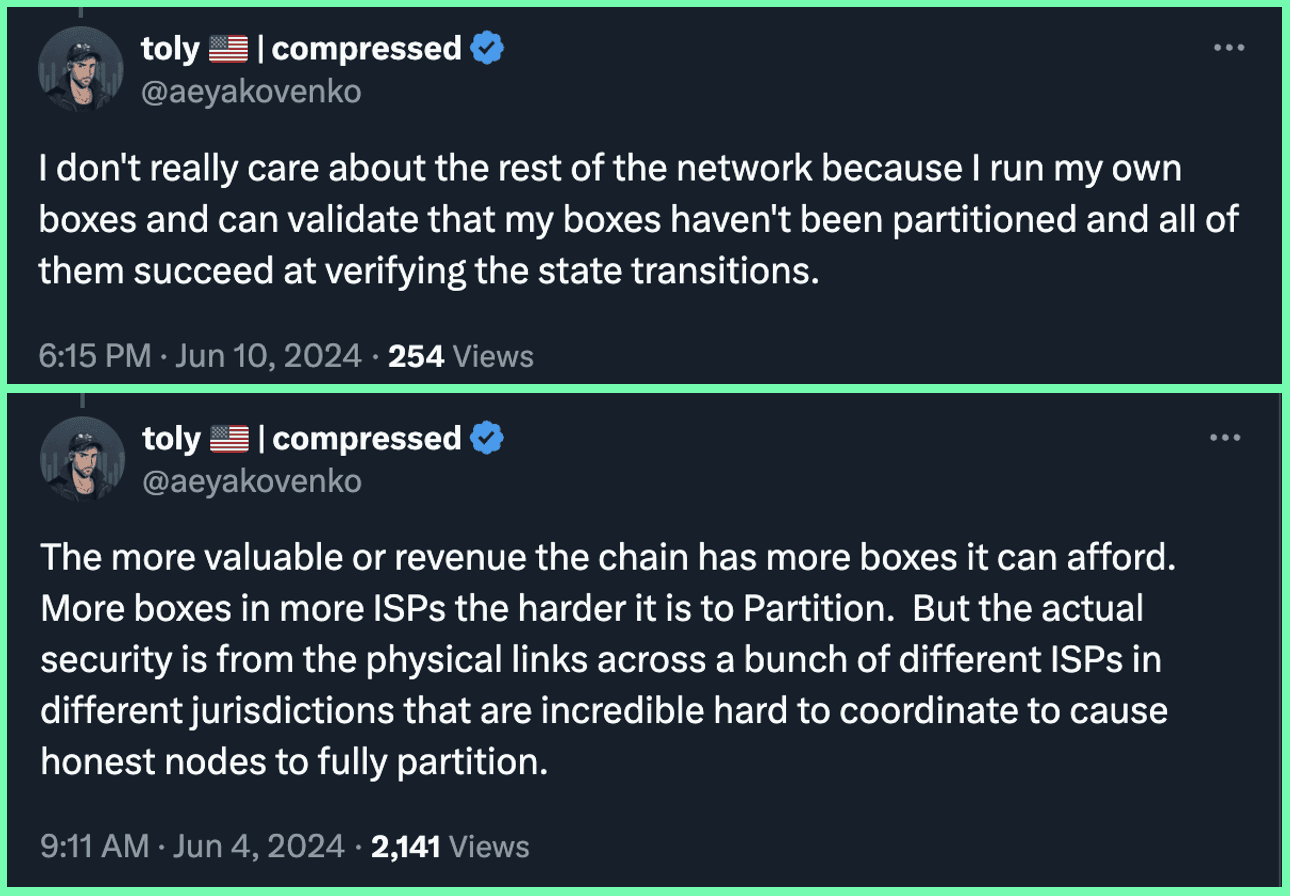

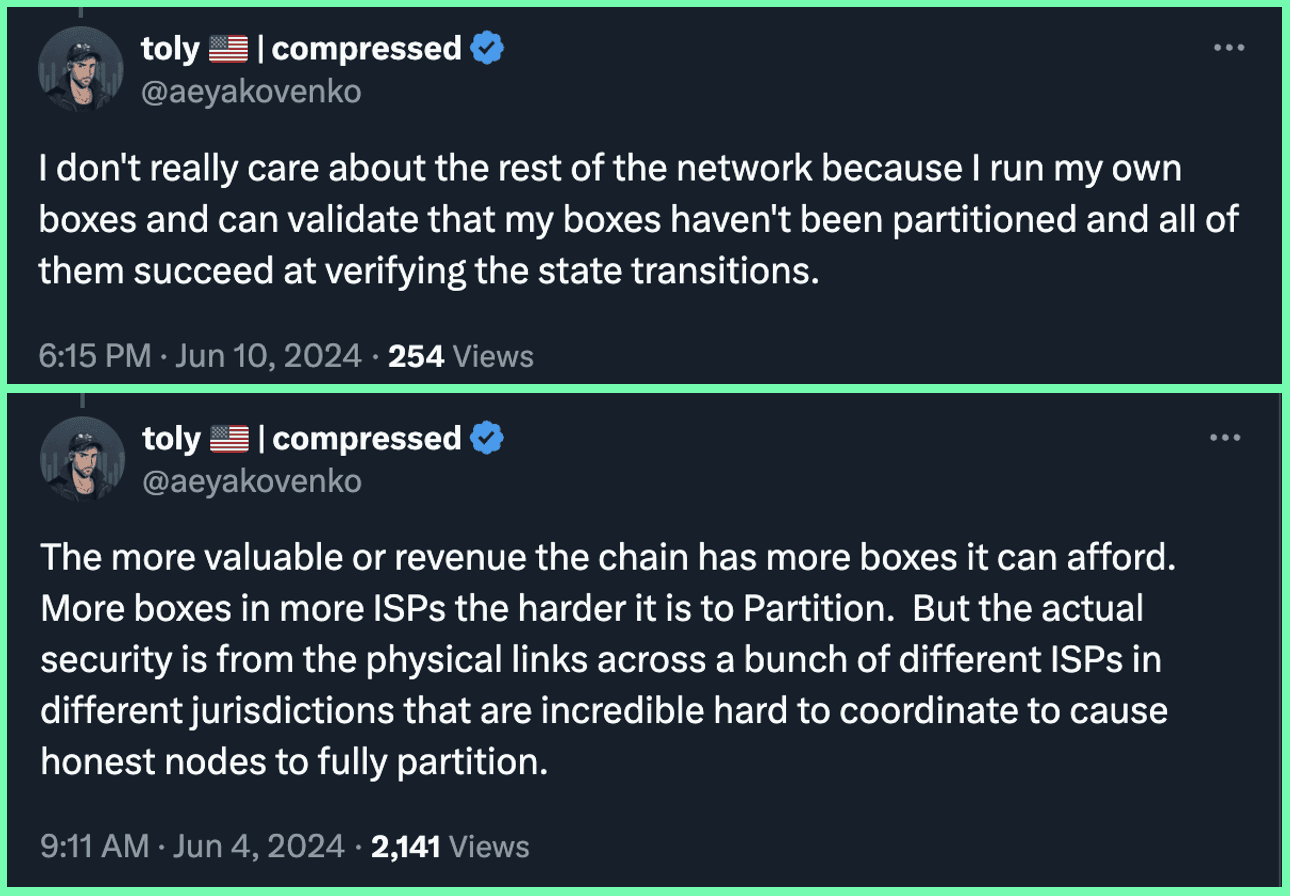

Isolating a single node is relatively easier than partitioning a large distributed network, but one can greatly minimize this attack vector by running multiple full nodes and monitoring whether any of them has forked from the others.
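Monitoring your own nodes for forks can be as simple as comparing the bank hash each one computed for the same slot. The sketch below assumes a hypothetical `NodeReport` holding per-slot bank hashes for slots each node considers final (how those hashes are collected is out of scope) and flags the first slot where any node disagrees with the first node that reported it. It only detects divergence; deciding which side was isolated needs further investigation.

```go
package main

import "fmt"

// NodeReport is a hypothetical per-node observation: the bank hash each of
// your own full nodes computed for the slots it has finalized.
type NodeReport struct {
	Node     string
	BankHash map[uint64][32]byte // slot -> bank hash
}

// FindDivergence scans slots in order and returns the first slot where a node
// disagrees with the first node that reported that slot, plus the names of
// the disagreeing nodes.
func FindDivergence(slots []uint64, reports []NodeReport) (uint64, []string, bool) {
	for _, slot := range slots {
		var ref [32]byte
		haveRef := false
		var odd []string
		for _, r := range reports {
			h, ok := r.BankHash[slot]
			if !ok {
				continue // this node has not reached the slot yet
			}
			if !haveRef {
				ref, haveRef = h, true
				continue
			}
			if h != ref {
				odd = append(odd, r.Node)
			}
		}
		if len(odd) > 0 {
			return slot, odd, true
		}
	}
	return 0, nil, false
}

func main() {
	good, bad := [32]byte{1}, [32]byte{2}
	reports := []NodeReport{
		{"home", map[uint64][32]byte{100: good, 101: good}},
		{"cloud", map[uint64][32]byte{100: good, 101: good}},
		{"office", map[uint64][32]byte{100: good, 101: bad}},
	}
	slot, odd, found := FindDivergence([]uint64{100, 101}, reports)
	fmt.Println(found, slot, odd) // true 101 [office]
}
```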

While the likelihood of these attacks is exceedingly low, and one can always point to the relatively short history of blockchains and note that such attacks have never occurred (normalcy bias), it’s important to minimize attack surfaces while balancing this effort against reasonable tradeoffs. Acceptable tradeoffs and mitigating protections are a major point of debate throughout the blockchain industry. Blockchains were conceived to safeguard against attacks by state actors or other entities with substantial resources. A higher number of full nodes strengthens this protection by making such attacks harder to execute, and it decreases reliance on economic security. One valid measure of decentralization is the cost to verify the chain, since lowering this cost ideally enables greater full node adoption and improves the security of the network.

Hard Fork Resistance

This is related to personal verification of the chain and is worth mentioning because it raises an important question: who owns the network? What is the canonical state in a distributed system? If we say that the state is whatever 67% of the stake attests to, then we're essentially giving a supermajority of validators the power to hard fork the network. Users who don’t verify the chain themselves have no say in the consensus rules of a protocol because they're trusting another entity, and that entity can hard fork the chain against the user's will.

While this seems unlikely, Bitcoin’s Blocksize Wars are a good example of a situation where highly vested entities (miners and VCs) tried to change the rules of the protocol and attempted to push a hard fork to benefit themselves.

For further illustration, imagine Solana hard forking into two separate chains, Solana Classic and Solana Moon, following a validator governance vote to increase Solana's inflation rate to compensate for low block rewards. Solana Moon is supported by larger entities associated with wallets, centralized exchanges, and RPC businesses, many of which run validators as a business and prefer the higher rewards that come with a higher inflation rate. Given this alignment, the major wallet providers, and the RPCs they do business with, only show users activity from Solana Moon in an attempt to increase its dominance. Solana Classic supporters who lack their own full nodes end up defaulting to the new chain. Some, however, manage to rent their own datacenter servers to run full nodes, and are able to regain access to their Solana Classic funds through custom RPC endpoints on certain wallets. Solana Classic maintains some activity despite the efforts of major entities to support a different fork, and lives on to some extent. Though this scenario is quite unlikely and rather simplistic, it should illustrate why running your own full node gives you more power as a network participant, and why efforts to make running a full node more practical are valuable for decentralization.

Streamlining Full Node Verification

Full nodes used for personal blockchain access and verification are uncommon on Solana, but with Mithril we hope to make them more practical by lowering the cost and technical know-how required to run one. A major reason for these higher barriers is that the standard node types on Solana, the validator node and the RPC node (specialized subclasses of full nodes), have high-end hardware and networking requirements due to their specialized, low-latency use cases:

A validator node:

Receives transactions (via QUIC in Solana), filters them (valid signatures, fees, etc.), and packs them into blocks during its “leader” slots. These blocks are then distributed to the rest of the network’s validators as shreds through Turbine.

When not the leader, it receives shreds, reconstructs them into transaction batches, and executes them. Once the block is fully reconstructed and executed (aka “replayed”), it can be voted on.

An RPC node:

Receives transaction requests from users via JSON RPC that will be forwarded to validators (when they are the block producing leader or a soon-to-be leader).

Serves JSON RPC queries (e.g., showing a user their wallet/account balances or simulating transactions) and maintains specialized indexes to make these queries more efficient. These indexes increase RAM requirements substantially.

There is a third node type, configured by passing certain flags to the validator (so it does not vote) and assigning it an identity without stake (so it never appears on the leader schedule). There is no specific name for this node, so we’ll refer to it as an “Agave full node”.

Agave full node:

The Agave validator client can be run in a “non block producing / non voting mode”, which prevents it from voting on consensus, producing blocks, or maintaining any of the indexes that an RPC node has. While this does lower the resource requirements, they remain high (close to those of a validator) because the node still executes blocks optimistically in real time, maintains multiple bank forks, and follows most of the code path that a regular validator does. Even where resource optimizations could be made here (discussed below), they would directly impact validator functionality and would need to live in a separately maintained codebase.

As noted earlier, these high-performance node types have higher resource requirements than what most users can access from home or an affordable cloud-hosted environment, which raises the technical and financial hurdles to running a node that verifies the chain. Below, we outline the sources of overhead that we plan to remove or minimize in Mithril in order to build a more minimal verifying full node:

1. Optimistic Execution: Validators closely follow the tip of the chain and execute blocks even before consensus on those blocks has been achieved. To perform this low-latency, “optimistic” validation, they use Proof of History (PoH) for synchronization and TowerBFT for fork selection while executing transaction batches (smaller parts of a block) that each leader validator streams across the network through Turbine. Once validators process all of the transaction batches that compose a block and verify its validity, they can optimistically vote on the fork associated with it. Performant validators are fast enough to vote on processed blocks most of the time, and at this stage multiple forks may be active. Eventually these forks converge and blocks become confirmed after competing forks are pruned, but while multiple early forks are active, validators and RPC nodes must maintain multiple bank states in memory, increasing RAM requirements.

The state that ultimately matters for users, though, is confirmed state (blocks that have achieved supermajority stakeweight and, to date, have never been rolled back on Solana), and blocks are typically confirmed within a few slots of being produced by the leader.

For purposes of verification, Mithril can reduce computational overhead by inspecting the stakeweight of votes in incoming blocks and only executing blocks once they become confirmed. Skipping processed-level execution adds a slight delay, since the node no longer verifies freshly produced blocks right at the tip, but this intentional lag simplifies verification and should, in theory, reduce memory and storage demands. It also simplifies the TowerBFT implementation that Mithril needs.
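A minimal sketch of this confirmation gating follows, assuming a simplified vote model in which each vote names a single slot (real Solana votes are tower-based and are observed inside later blocks). `ConfirmationTracker` is a hypothetical type, not Mithril's actual design. It sums the stake voting for each slot and signals once that stake exceeds two-thirds of the total, the point at which a verifying node would schedule the block for execution.

```go
package main

import "fmt"

// ConfirmationTracker accumulates the stake that has voted for each slot and
// reports when a slot crosses the supermajority (more than 2/3) threshold.
// Simplification: each vote is (voter, slot) for a single slot.
type ConfirmationTracker struct {
	stakes     map[string]uint64          // voter identity -> stake
	totalStake uint64                     // sum of all voter stake
	votedStake map[uint64]uint64          // slot -> stake that has voted for it
	seen       map[uint64]map[string]bool // slot -> voters already counted
	confirmed  map[uint64]bool            // slots that crossed the threshold
}

func NewConfirmationTracker(stakes map[string]uint64) *ConfirmationTracker {
	var total uint64
	for _, s := range stakes {
		total += s
	}
	return &ConfirmationTracker{
		stakes:     stakes,
		totalStake: total,
		votedStake: map[uint64]uint64{},
		seen:       map[uint64]map[string]bool{},
		confirmed:  map[uint64]bool{},
	}
}

// AddVote records a vote and returns true only the first time the slot
// becomes confirmed, i.e. when a verifying node would execute it.
func (t *ConfirmationTracker) AddVote(voter string, slot uint64) bool {
	if t.confirmed[slot] {
		return false
	}
	if t.seen[slot] == nil {
		t.seen[slot] = map[string]bool{}
	}
	if t.seen[slot][voter] {
		return false // count each voter at most once per slot
	}
	t.seen[slot][voter] = true
	t.votedStake[slot] += t.stakes[voter]
	if 3*t.votedStake[slot] > 2*t.totalStake {
		t.confirmed[slot] = true
		return true
	}
	return false
}

func main() {
	tr := NewConfirmationTracker(map[string]uint64{"A": 40, "B": 30, "C": 20, "D": 10})
	for _, v := range []string{"A", "B", "C"} {
		if tr.AddVote(v, 42) {
			fmt.Printf("slot 42 confirmed after vote from %s; execute it now\n", v)
		}
	}
}
```

Blocks that never reach the threshold are simply never executed, which is what spares the node from holding several speculative bank states at once.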

2. Transaction Ingress: Both validators and RPC nodes serve as ingress points for user transactions and need the hardware and networking capacity to handle large amounts of traffic, whether from high demand, “spam”, or more serious distributed denial-of-service (DDoS) attacks.

For transaction ingress, Solana uses QUIC, a lightweight transport protocol built on top of UDP. Solana originally sent transactions over raw UDP, but since UDP has no flow control, validators had no way to slow the rate of incoming transactions as needed. Solana’s ability to mitigate spam improved significantly in 2022 with the addition of QUIC as a flow control layer, along with economic backpressure in the form of priority fees. QUIC, however, adds overhead and complexity for validators. It currently includes TLS encryption (which is not strictly necessary) and a lot of userspace code to track connections and streams, plus tables that allocate streams to each sender by stakeweight. Managing, pruning, and allocating these connections adds further overhead. Under high traffic, this burden is heavy enough that even the data center machines validators run on sometimes fall behind.
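To make stake-weighted stream allocation more concrete, the sketch below gives each peer a concurrent-stream limit: a fixed allowance for unstaked peers, and a pro-rata share of a common budget (with a floor) for staked peers. The policy shape, field names, and numbers are illustrative assumptions and do not reproduce any client's actual logic or constants. `bits.Mul64` and `bits.Div64` from the standard library provide a 128-bit intermediate so large lamport values cannot overflow.

```go
package main

import (
	"fmt"
	"math/bits"
)

// StreamPolicy holds hypothetical limits; names and values are illustrative
// and are not taken from any validator client.
type StreamPolicy struct {
	UnstakedStreams    uint64 // fixed allowance for peers with no stake
	MinStakedStreams   uint64 // floor for peers with a small stake
	TotalStakedStreams uint64 // budget shared pro rata among staked peers
}

// MaxConcurrentStreams returns how many concurrent streams a peer may open.
func (p StreamPolicy) MaxConcurrentStreams(peerStake, totalStake uint64) uint64 {
	if peerStake == 0 || totalStake == 0 {
		return p.UnstakedStreams
	}
	if peerStake > totalStake {
		peerStake = totalStake // guard against inconsistent inputs
	}
	// share = TotalStakedStreams * peerStake / totalStake, computed with a
	// 128-bit product. Because peerStake <= totalStake, the quotient is at
	// most TotalStakedStreams, so bits.Div64 cannot overflow.
	hi, lo := bits.Mul64(p.TotalStakedStreams, peerStake)
	share, _ := bits.Div64(hi, lo, totalStake)
	if share < p.MinStakedStreams {
		return p.MinStakedStreams
	}
	return share
}

func main() {
	policy := StreamPolicy{UnstakedStreams: 8, MinStakedStreams: 16, TotalStakedStreams: 10_000}
	total := uint64(400_000_000)
	for _, stake := range []uint64{0, 10_000, 4_000_000, 40_000_000} {
		fmt.Printf("stake %d -> %d streams\n", stake, policy.MaxConcurrentStreams(stake, total))
	}
}
```

Every incoming connection needs a lookup like this plus bookkeeping for its open streams, which is part of the per-connection cost that a verifying node without transaction ingress avoids.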

After passing through the network layer, every transaction that arrives at a validator’s Transaction Processing Unit (TPU) needs to pass through various filtering and ordering steps before it can be included in a block. These steps must be performed for a large number of transactions that hit the validator's TPU port directly, as well as the TPU_FWD port, where unprocessed transactions are forwarded from other validators. While processing one transaction might not cost much, the high volume that validators handle places heavy strain on CPU, memory, and most importantly, networking. RPC nodes face similar challenges when receiving transaction query/simulation requests from users. Some of this load can be reduced with firewall rules that limit spam from certain sources, but the remaining strain will still be higher than that of a personal verifying node, because a verifying node only processes transactions that are already on-chain in confirmed blocks.
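The filtering and ordering work described above can be sketched roughly as follows. This is a deliberately simplified model, assuming signature verification has already been performed and using a single priority-fee field; the real TPU pipeline has more stages (fee-payer checks, account locking, compute limits, and so on). `Tx` and `FilterAndOrder` are hypothetical names.

```go
package main

import (
	"fmt"
	"sort"
)

// Tx is a simplified stand-in for an incoming transaction.
type Tx struct {
	Signature   string
	SigValid    bool   // result of signature verification, assumed precomputed
	PriorityFee uint64 // hypothetical priority fee used only for ordering
}

// FilterAndOrder drops transactions that fail signature checks and duplicates
// of already-accepted signatures, then orders the survivors by priority fee
// (highest first), keeping arrival order among equal fees.
func FilterAndOrder(in []Tx) []Tx {
	seen := make(map[string]bool)
	out := make([]Tx, 0, len(in))
	for _, tx := range in {
		if !tx.SigValid || seen[tx.Signature] {
			continue
		}
		seen[tx.Signature] = true
		out = append(out, tx)
	}
	sort.SliceStable(out, func(i, j int) bool { return out[i].PriorityFee > out[j].PriorityFee })
	return out
}

func main() {
	incoming := []Tx{{"a", true, 5}, {"b", false, 100}, {"a", true, 5}, {"c", true, 9}, {"d", true, 5}}
	for _, tx := range FilterAndOrder(incoming) {
		fmt.Println(tx.Signature, tx.PriorityFee)
	}
}
```

A verifying node never runs a stage like this, since every transaction it executes has already been filtered, ordered, and included by a leader in a confirmed block.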

Note that transaction ingress and forwarding can also be turned off on a regular validator node to convert it into an Agave full node, so this is not a benefit exclusive to Mithril. However, since the existing codebase still loads these components, they create and occupy resources (e.g., channels, data structures, locks) even when they're not actively used.

3. PoH: Validators dedicate a single core to grinding the Proof of History SHA256 hash sequence. This core hashes constantly and is currently necessary as a synchronization mechanism that lets validators determine when it’s their turn to produce blocks. Because Mithril doesn't produce blocks, this core can be freed up. A single core is not an issue for larger data center machines (for example, those with 24 physical cores and higher clock speeds), but it can be a concern for consumer machines with just 4-8 cores. Note that Mithril still verifies PoH, but this isn’t a big issue because, unlike generation, verification is parallelizable.

4. Latency Prioritization: Slots are only 400 ms long, and blocks are streamed to other validators through Turbine in parallelizable batches of transactions as the leader produces them. The default code prioritizes low latency by executing transaction batches as quickly as possible. As a result, smaller batches are processed throughout the slot, rather than waiting until the end to execute a larger number of transactions in parallel.

One of the fundamental tradeoffs Mithril aims to leverage is trading latency for throughput: accepting a few seconds of latency in order to replay larger batches can amortize resource costs (disk, CPU, bandwidth, etc.). We plan to make the degree of amortization configurable where feasible; for example, users could choose larger batch windows based on their available bandwidth and hardware. Actual benchmarking will inform the best architectural tradeoffs, and the batch replay strategy will draw inspiration from the staged sync of Erigon, an Ethereum client written in Go. This should primarily help with the bursty resource availability of consumer hardware and networking, and the same batch processing strategies could enable more efficient catch-up when a node falls behind the rest of the network.

5. Gossip: Gossip is currently a large source of network traffic. Broadly speaking, Solana’s network traffic can be divided into the “Data Plane” and the “Control Plane”. The Data Plane refers to Turbine, whose primary purpose is to disseminate blocks across the network. The Control Plane refers to “Gossip”, a peer-to-peer protocol for exchanging node information (IP addresses, the public key of a validator or full node, which nodes serve snapshots, which ports a machine has open, etc.). This information can change frequently, so nodes need to communicate with one another constantly. Gossip is also used to exchange repair data in case the leader's block broadcast doesn’t reach all nodes.

Mithril can still work with Gossip completely disabled, and operators should have this option, but nodes that serve confirmed blocks and other minimal information over Gossip do add to the network’s mesh security. Mithril will aim to decouple Gossip participation and make it configurable, and we also plan to build alternative block distribution pathways that place less burden on the network. We are also hopeful that Solana’s Gossip bandwidth will be significantly reduced in the future.

6. Indexes: These are used to improve query efficiency for RPC services or high-frequency trading, and they give Solana RPC nodes higher RAM requirements than validators because account indexes are stored in RAM. They can be disabled on an Agave node for a more full-node-like configuration, but they are still worth mentioning because Solana RPC node requirements are often conflated with the minimum requirements for full nodes.
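To show why such indexes cost RAM, the sketch below implements a simplified owner index (program owner to the set of accounts it owns), the kind of structure that lets an RPC node answer "all accounts owned by program X" without scanning every account. `OwnerIndex` is a hypothetical type, and `MinBytes` is only a lower bound that counts raw 32-byte keys while ignoring Go map overhead, which adds substantially more in practice.

```go
package main

import (
	"encoding/binary"
	"fmt"
)

// Pubkey is a 32-byte account or program address.
type Pubkey [32]byte

// OwnerIndex maps each owner program to the set of accounts it owns.
// A node that only verifies the chain has no need to maintain it.
type OwnerIndex struct {
	byOwner map[Pubkey]map[Pubkey]struct{}
}

func NewOwnerIndex() *OwnerIndex {
	return &OwnerIndex{byOwner: map[Pubkey]map[Pubkey]struct{}{}}
}

// Insert records that owner owns account; repeated inserts are no-ops.
func (ix *OwnerIndex) Insert(owner, account Pubkey) {
	set, ok := ix.byOwner[owner]
	if !ok {
		set = make(map[Pubkey]struct{})
		ix.byOwner[owner] = set
	}
	set[account] = struct{}{}
}

// Count returns how many accounts the given owner owns.
func (ix *OwnerIndex) Count(owner Pubkey) int { return len(ix.byOwner[owner]) }

// MinBytes is a lower bound on the index's RAM footprint: 32 bytes per
// distinct owner key plus 32 bytes per indexed account key.
func (ix *OwnerIndex) MinBytes() uint64 {
	var n uint64
	for _, set := range ix.byOwner {
		n += 32 + 32*uint64(len(set))
	}
	return n
}

func main() {
	ix := NewOwnerIndex()
	// Placeholder owner keys, not real program addresses.
	var ownerA, ownerB Pubkey
	ownerA[0], ownerB[0] = 1, 2
	for i := 0; i < 1000; i++ {
		var acct Pubkey
		binary.LittleEndian.PutUint32(acct[:], uint32(i))
		owner := ownerB
		if i%4 == 0 {
			owner = ownerA
		}
		ix.Insert(owner, acct)
	}
	fmt.Println("accounts owned by A:", ix.Count(ownerA), "minimum index bytes:", ix.MinBytes())
}
```

Because the lower bound already grows linearly with the number of indexed accounts, an index over every account on mainnet is a significant RAM commitment before any map overhead is counted.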

Improving Solana’s Comprehensibility

Now that you have a better understanding of how the architecture of a verifying full node can be streamlined, we’d like to share our approach to lowering the barriers for developers and researchers to grasp the inner workings of Solana’s state transition function (STF), including the SVM, before going into Mithril’s roadmap and specific implementation details. Two overarching implementation choices we are making for Mithril, which we elaborate on below, are:

A simplified, modular full node client codebase, building on the ideas discussed in the previous section, will serve as a more accessible STF prototype.

A simple, readable programming language, Go, supports our mission to make the Solana core protocol more comprehensible.

A Minimal Solana STF Implementation

The current primary validator client, Agave, is a tightly coupled and complex codebase, making it tough to run a minimal full node without significant "untangling" of the needed components and cleanly breaking their dependencies. One primary reason for this is that, as stated, validator clients do a lot more than the minimal STF: among other functions, they process incoming transactions, maintain multiple banks and account versions, and participate in consensus.

A second reason, however, is historical. Solana went live in March 2020 and has since undergone significant changes: bugs were fixed, new features were added, and there were significant redesigns. Modifying production code is akin to changing the wheels on a moving car, so the highest priority is ensuring safety, especially considering the speed at which Solana ships features. This means that while significant refactoring might bring some benefits, they are minor compared to the risks that come with large rewrites. As such, the codebase has evolved over the years and carries some baggage with it. This is not a criticism in any way, just a reality of maintaining production code.

This was one of the reasons that motivated the decision to build out a full node client largely from scratch, so that we could include only the core components of a minimal STF. Doing so ensures that the implementation is concise, readable and also enables us to focus on making tradeoffs to prioritize readability and the minimal feature set needed to run on consumer-grade hardware.

Having a simple reference implementation of Solana’s STF also provides an entry point before digging into the more complicated, performance-optimized codebases of Agave and Firedancer, and thus smooths the path for developers and researchers to build a deeper understanding of the core Solana protocol. The Sig validator client team is also seeking to improve comprehension of Solana’s core architecture by working on a well-documented, concise validator client implementation in Zig.

Many specialized use cases for blockchain state and the state transition function currently require external plugins built on the Geyser interface, which relies on Rust's notoriously unstable ABI. Having Solana’s STF isolated in a module makes it possible to build more tightly integrated tools: for example, analytics tools, explorers, games, and other apps that require off-chain execution hooks can be written directly in Go. A simple reference implementation lets the ecosystem fork and modify it as needed with native code, instead of relying on data streamed from Geyser for tasks such as firing an event or updating a Postgres table after each block. Triton has already used Radiance, the upstream project that Mithril continues, to develop tools for their Old Faithful project, which stores historical Solana ledger data on the Filecoin network.
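As a rough illustration of what an isolated STF module with native hooks could look like, the sketch below applies a block of toy transfers to an in-memory state and then calls any registered hooks with the new state. Every type here (`Account`, `State`, `Block`, `Transfer`, `BlockHook`) is a hypothetical stand-in, far simpler than real Solana accounts and transactions, and this is not Mithril's API. The point is only that a Go program can consume post-block state in-process instead of through a Geyser plugin.

```go
package main

import (
	"errors"
	"fmt"
)

// Hypothetical, heavily simplified types.
type Account struct {
	Lamports uint64
}

type Transfer struct {
	From, To string
	Lamports uint64
}

type Block struct {
	Slot      uint64
	Transfers []Transfer
}

type State map[string]Account

// BlockHook runs after a block is applied successfully; this is the kind of
// in-process extension point a Go-native STF module could expose.
type BlockHook func(b Block, s State)

// ApplyBlock is a toy state transition function: it applies every transfer
// in order to a copy of the state and rejects the whole block (returning the
// original state) if any transfer overdraws an account.
func ApplyBlock(s State, b Block, hooks ...BlockHook) (State, error) {
	next := make(State, len(s))
	for k, v := range s {
		next[k] = v
	}
	for _, t := range b.Transfers {
		from := next[t.From]
		if from.Lamports < t.Lamports {
			return s, errors.New("insufficient funds")
		}
		from.Lamports -= t.Lamports
		next[t.From] = from
		to := next[t.To]
		to.Lamports += t.Lamports
		next[t.To] = to
	}
	for _, h := range hooks {
		h(b, next)
	}
	return next, nil
}

func main() {
	s := State{"alice": {Lamports: 100}}
	logBalances := func(b Block, st State) {
		fmt.Printf("slot %d: alice=%d bob=%d\n", b.Slot, st["alice"].Lamports, st["bob"].Lamports)
	}
	s, err := ApplyBlock(s, Block{Slot: 1, Transfers: []Transfer{{"alice", "bob", 30}}}, logBalances)
	if err != nil {
		fmt.Println("rejected:", err)
	}
	fmt.Println("bob now holds", s["bob"].Lamports)
}
```

A hook like `logBalances` could just as well write to a database or emit an event, which is the kind of task the paragraph above describes doing today through Geyser.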

Why Go?

1. Novel: Validator clients were already in active development in Rust, C, and Zig. The first alternative client effort to the original Solana Labs client was actually Radiance, which was being developed in Go even before Firedancer had begun. Radiance’s development had paused, however, because its primary developer, Richard Patel, shifted to working on Firedancer, so it was a natural starting point, and we continued it with his blessing.

2. Simplicity: One of Go's language design goals is syntactic simplicity. Because Mithril also aims to document the core protocol, a simple language was preferable. Go can be verbose, but it is easily understood by anyone with basic programming knowledge, whereas languages like Rust require understanding more nuanced features such as ownership, lifetimes, memory management, and a richer type system with more abstractions. We intend to make the Mithril codebase well structured, modular, and reusable. These factors, combined with Go’s simplicity, will allow the codebase to serve as a reference and research implementation accessible to developers and researchers of varying skill and experience levels who want to understand how the Solana network operates.

3. Simple and Robust Toolchain: Go doesn't have any inherent external dependencies (i.e. libc), which makes cross compilation and execution on different platforms easy and build times very fast. We intend to make deployment of a Mithril full node trivial (i.e. runnable with a single build command, with no special configuration necessary to do so). The most secure method to run Mithril will be building from source which is relatively simple and automated in Go, but users will also have the option to download pre-built verifiable binaries.

4. Performant: Go is a low level language with a good default concurrency model that enables writing code that meets Mithril's performance requirements without being too complicated. While typically not allowing as much fine tuning as Rust, C, or Zig would, it can still provide comparable performance and has reasonable defaults.

5. Familiarity: Go is a language that is popular inside and outside of the blockchain community. Go was originally developed by Google and is commonly used in DevOPs, Cloud Infrastructure, Networking, and Fintech applications. In the blockchain world, Go was the language of choice for the reference implementation of Ethereum (Go-Ethereum aka Geth), and much of the Cosmos ecosystem is also centered around Go. A client in Go will lower the barrier to understand and contribute to the Solana core protocol for devs and researchers from those ecosystems.

Now that you have a better understanding of how the architecture of a verifying full node can become more streamlined, we’d like to share more about our approach to lowering the barriers for developers and researchers to grasp the inner workings of Solana’s state transition function, including the SVM, before going into Mithril’s roadmap and specific implementation details. Two overarching implementation choices we are making for Mithril, which we elaborate on below, are:

A simplified, modular full node client codebase, building on the ideas discussed in the previous section, will serve as a more accessible STF prototype.

A simplified, readable programming language, Go, supports our mission to make the Solana core protocol more comprehensible.

A Minimal Solana STF implementation

The current primary validator client, Agave, is a tightly coupled and complex codebase, which makes it hard to run a minimal full node without significant "untangling" of the needed components and cleanly breaking their dependencies. One primary reason is that, as stated, the validator clients do much more than run the minimal STF: among other functions, they process incoming transactions, maintain multiple banks, version accounts, and participate in consensus.

A second reason, however, is historical. Solana went live in March 2020 and has since undergone significant changes: bugs were fixed, new features were added, and parts of the system were substantially redesigned. Modifying production code is akin to changing the wheels on a moving car, so the highest priority is ensuring safety, especially given the speed at which Solana ships features. This means that while significant refactoring might bring some benefits, those benefits are minor compared to the risks that come with large rewrites. As such, the codebase has evolved over the years and carries some amount of baggage with it. This is not a criticism in any way, just a reality of maintaining production code.

This is one of the reasons we decided to build a full node client largely from scratch, so that we could include only the core components of a minimal STF. Doing so keeps the implementation concise and readable, and lets us make tradeoffs that prioritize readability and the minimal feature set needed to run on consumer-grade hardware.

Having a simple reference implementation of Solana’s STF also provides an entry point before digging into the more complicated, performance-optimized codebases of Agave and Firedancer, and thus smooths the path for developers and researchers to build a deeper understanding of the core Solana protocol. The Sig validator client team is also seeking to improve comprehension of Solana’s core architecture by working on a well-documented, concise validator client implementation in the Zig language.

Many specialized use cases for blockchain state and the state transition function currently require external plugins built on the Geyser interface, which relies on the notoriously unstable Rust ABI. Isolating Solana’s STF in a module enables tools that are more tightly integrated: analytics tools, explorers, games, and other apps that need off-chain execution hooks can be written directly in Go. A simple reference implementation lets the ecosystem fork and modify it as needed with native code, instead of relying on data streamed from Geyser for tasks such as firing an event or updating a Postgres table after each block. Triton has already used Mithril’s upstream project, Radiance, in developing tools for its Old Faithful project, which stores historical Solana ledger data on the Filecoin network.
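As a rough illustration of that kind of integration, the sketch below shows a post-block hook written as ordinary Go code linked into the node, rather than as a Geyser plugin. The `BlockHook` interface, the `BlockResult` type and the `replayBlock` function are hypothetical names chosen for illustration; they are not Mithril's actual API, and a real replay loop would carry far more state.

```go
package main

import "fmt"

// BlockResult is a hypothetical summary of one replayed block.
type BlockResult struct {
	Slot         uint64
	Transactions int
	Failed       int
}

// BlockHook is a hypothetical extension point: Go code that runs after each
// block is executed, in the same process as the STF.
type BlockHook interface {
	OnBlock(res BlockResult) error
}

// failedTxCounter is an example hook that aggregates failed transactions,
// standing in for tasks like firing an event or updating a Postgres table.
type failedTxCounter struct {
	total int
}

func (c *failedTxCounter) OnBlock(res BlockResult) error {
	c.total += res.Failed
	return nil
}

// replayBlock stands in for executing a block through the STF; here it only
// builds a result and passes it to every registered hook, in order.
func replayBlock(slot uint64, txs, failed int, hooks []BlockHook) error {
	res := BlockResult{Slot: slot, Transactions: txs, Failed: failed}
	for _, h := range hooks {
		if err := h.OnBlock(res); err != nil {
			return fmt.Errorf("hook failed at slot %d: %w", slot, err)
		}
	}
	return nil
}

func main() {
	counter := &failedTxCounter{}
	hooks := []BlockHook{counter}
	_ = replayBlock(100, 1200, 3, hooks)
	_ = replayBlock(101, 950, 2, hooks)
	fmt.Println("failed transactions seen:", counter.total) // prints 5
}
```

Because the hook is plain Go in the same process, it sees each block's results directly instead of consuming a serialized stream.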

Why Go?

1. Novel: Validator clients were already in active development in Rust, C, and Zig. The first alternative client effort to the original Solana Labs client was actually Radiance, which was being developed in Go even before Firedancer had begun. Radiance’s early development had paused, however, because its primary developer, Richard Patel, shifted to working on Firedancer, which made it a good starting point to continue from, with his blessing.

2. Simplicity: One of Go's design goals is syntactic simplicity. Because Mithril also aims to document the core protocol, a simple language was preferable. Go can be verbose, but it is easily understood by anyone with basic programming knowledge, whereas languages like Rust require understanding more nuanced features such as ownership, lifetimes, memory management, and strong typing and abstractions. We intend to make the Mithril codebase well structured, modular, and reusable. These factors, combined with Go’s simplicity, will allow the codebase to serve as a reference and research implementation that is accessible to developers and researchers of a range of skill and experience levels who want to understand how the Solana network operates.

3. Simple and Robust Toolchain: Go doesn't inherently depend on external libraries (e.g., libc), which makes cross-compilation and execution on different platforms easy and keeps build times very fast. We intend to make deploying a Mithril full node trivial (i.e., runnable with a single build command and no special configuration). The most secure way to run Mithril will be to build it from source, which is relatively simple and automated in Go, but users will also have the option to download pre-built verifiable binaries.

4. Performant: Go is a compiled, relatively low-level language with a good default concurrency model, which makes it possible to write code that meets Mithril's performance requirements without excessive complexity. While it typically does not allow as much fine-tuning as Rust, C, or Zig, it can still deliver comparable performance and has reasonable defaults.

5. Familiarity: Go is popular both inside and outside the blockchain community. It was originally developed at Google and is commonly used in DevOps, cloud infrastructure, networking, and fintech applications. In the blockchain world, Go was the language of choice for the reference implementation of Ethereum (Go-Ethereum, aka Geth), and much of the Cosmos ecosystem is also centered around Go. A client in Go will lower the barrier for developers and researchers from those ecosystems to understand and contribute to the Solana core protocol.

Roadmap

Radiance, Mithril’s Predecessor

Mithril's development began with Radiance, a project founded and led by Richard Patel, with contributions from Leo. At the time of beginning work on Mithril, Radiance already featured Go implementations for several Solana components, including a Solana BPF virtual machine/interpreter, Gossip, Blockstore, and PoH verification. Radiance’s BPF VM was the first non-Solana Labs implementation of sBPF.

We gradually built our way up from Richard’s nicely written BPF VM into eventually having a fully testable execution environment inclusive of native programs, the full suite of syscalls, a runtime, and Cross-Program Invocation. Radiance provided an excellent foundation upon which to build.

Milestone 1 Completed

Beginning in February 2024, Overclock team member Shaun Colley forked Radiance to spearhead Mithril’s development. He recently completed Mithril’s first milestone: a complete, working implementation of the Solana Virtual Machine (SVM) in Go. Although definitions of the SVM still vary, we use a broad one here that encompasses all of the components necessary to execute individual Solana transactions.

In short, then, this milestone features all components necessary to execute Solana transactions, including:

- sBPF (a very simple and elegant Go implementation borrowed from Radiance)
- Solana Runtime
- Cross Program Invocation (CPI)
- Syscalls
- Sysvars
- Compute unit accounting
- Account state management
- And so on…
- Solana’s Native Programs
  - System program
  - Vote program
  - BPF Loader program
  - Stake program
  - Address lookup table program
  - Config program
- Pre-compiles
  - Ed25519
  - secp256k1
- Test case coverage

Because the focus of Milestone 1 was to develop a working Solana execution layer, the majority of the work done in this milestone may be found in the sealevel package.

A considerable amount of time was spent testing that Mithril “does the right thing” for conformance purposes. Where possible, we integrated Mithril with Firedancer’s test-vectors corpus, for example to test several of Mithril’s native programs. The relevant test harnesses can be found in the conformance package.

Many test cases were also written by hand to exercise code paths in native programs, syscalls, and the CPI infrastructure; these include both test code written in Go and BPF programs that trigger the relevant code paths. These tests are also located in the sealevel package, in files whose names end in “_test.go”. Ultimately, they are designed to demonstrate that Mithril’s native programs, CPI implementation, and syscalls execute as expected and produce the expected account states.
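The conformance approach described above boils down to running an input through the implementation and comparing the resulting account state against an expected fixture. The sketch below shows that shape in a self-contained way; the `fixture` struct, the toy `transfer` executor and the `check` function are hypothetical stand-ins, not the format of Firedancer's test-vectors corpus or the code in Mithril's conformance package.

```go
package main

import (
	"errors"
	"fmt"
)

// account is a hypothetical, minimal account: just a lamport balance.
type account struct{ Lamports uint64 }

// fixture pairs an input state and instruction with the expected result,
// loosely mirroring the shape of a conformance test vector.
type fixture struct {
	name     string
	accounts map[string]account
	from, to string
	amount   uint64
	wantErr  bool
	want     map[string]account
}

// transfer is a toy executor standing in for a native program.
func transfer(accts map[string]account, from, to string, amount uint64) error {
	src, ok := accts[from]
	if !ok || src.Lamports < amount {
		return errors.New("insufficient funds")
	}
	accts[from] = account{src.Lamports - amount}
	dst := accts[to] // read after the debit so a self-transfer nets to zero
	accts[to] = account{dst.Lamports + amount}
	return nil
}

// check runs one fixture on a copy of its input state and reports any mismatch.
func check(f fixture) error {
	state := make(map[string]account, len(f.accounts))
	for k, v := range f.accounts {
		state[k] = v
	}
	err := transfer(state, f.from, f.to, f.amount)
	if (err != nil) != f.wantErr {
		return fmt.Errorf("%s: error = %v, wantErr = %v", f.name, err, f.wantErr)
	}
	for k, want := range f.want {
		if got := state[k]; got != want {
			return fmt.Errorf("%s: account %s = %+v, want %+v", f.name, k, got, want)
		}
	}
	return nil
}

func main() {
	fixtures := []fixture{
		{name: "ok", accounts: map[string]account{"a": {100}, "b": {0}},
			from: "a", to: "b", amount: 40,
			want: map[string]account{"a": {60}, "b": {40}}},
		{name: "overdraw", accounts: map[string]account{"a": {10}},
			from: "a", to: "b", amount: 40, wantErr: true,
			want: map[string]account{"a": {10}}},
	}
	for _, f := range fixtures {
		if err := check(f); err != nil {
			fmt.Println("FAIL", err)
			continue
		}
		fmt.Println("PASS", f.name)
	}
}
```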

Future Milestones

Our next milestone will be focused on developing Mithril’s capacity to sync and replay blocks from the network. Although we’re relatively confident that Mithril’s SVM is conformant with Agave’s after developing broad test case coverage, replaying blocks will alert us to any additional edge cases or bugs. Full block replay will also require conformance with Solana’s broader State Transition Function. For this milestone we will need the following:

- Snapshot syncing and decoding
- Batched replay for catch-up after initializing state from a snapshot
- Blockstore (already exists in Radiance)
- AccountsDB
- STF rules (block- and epoch-level)
- A simple RPC interface with a simple custom RPC index
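The snapshot-plus-catch-up flow in the list above can be summarized as: load state at a snapshot slot, then fetch and replay confirmed blocks in batches until reaching the cluster tip. The sketch below is a hypothetical outline under simplifying assumptions; `fetchBlocks`, `catchUp` and the batch size are placeholders rather than Mithril functions, the toy fetcher ignores skipped slots, and real replay would also verify PoH and bank hashes.

```go
package main

import "fmt"

// block is a hypothetical confirmed block; only the slot matters here.
type block struct{ Slot uint64 }

// fetchBlocks stands in for pulling an inclusive range of confirmed blocks
// (e.g. from RPC or the blockstore). Real ledgers contain skipped slots;
// this toy fetcher returns every slot in the range.
func fetchBlocks(from, to uint64) []block {
	out := make([]block, 0, to-from+1)
	for s := from; s <= to; s++ {
		out = append(out, block{Slot: s})
	}
	return out
}

// catchUp replays every slot after snapshotSlot up to and including tip,
// batchSize blocks at a time, and returns the last successfully replayed slot.
func catchUp(snapshotSlot, tip, batchSize uint64, apply func(block) error) (uint64, error) {
	if batchSize == 0 {
		return snapshotSlot, fmt.Errorf("batchSize must be positive")
	}
	last := snapshotSlot
	for last < tip {
		end := last + batchSize
		if end > tip {
			end = tip
		}
		for _, b := range fetchBlocks(last+1, end) {
			if err := apply(b); err != nil {
				return last, fmt.Errorf("replay failed at slot %d: %w", b.Slot, err)
			}
			last = b.Slot
		}
	}
	return last, nil
}

func main() {
	replayed := 0
	apply := func(b block) error { replayed++; return nil }
	last, err := catchUp(1000, 1025, 10, apply)
	fmt.Println(last, replayed, err) // prints 1025 25 <nil>
}
```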

For our final milestone, we will focus on intensive system-wide optimization, experimenting with a staged-sync batch processing model similar to Erigon’s. We will also test various block replay algorithms, such as BlockSTM, to optimize replay speed for confirmed blocks. Additionally, we hope to minimize reliance on snapshots even when Mithril nodes fall far behind, leveraging batch processing techniques to speed up catch-up. Notably, GPUs may be very useful here, since bulk signature verification is highly efficient on them. Although GPUs were initially expected to play a key role in Solana validator performance, CPUs have proven much more efficient for signature verification on production validators during real-time block processing, because the current transaction load is not yet high enough to yield performance gains on GPUs. Beyond performance tuning, we will ensure that Mithril runs smoothly on various operating systems and can be distributed as a verifiable binary for users who find building from source inconvenient.

Expected Hardware Requirements

Regarding target hardware requirements, we aren’t yet at the stage of running hardware benchmarks, but we strongly believe it will be possible to run a Mithril full node on consumer hardware, given that the RAM usage of our much more demanding Agave validator has dropped to as low as ~35 GB in the past year. We are hopeful that a dedicated full node setup with as little as 16 to 32 GB of RAM, 8 cores, and 1 TB of NVMe storage (for AccountsDB, the ledger, potential indexes, etc.) will be feasible, such as the roughly $400 setup shown here, but exact needs are still TBD and could be higher or lower. Hardware requirements will be assessed in our final milestone, and we hope to involve the Solana community in these efforts when the time comes.

Expected Bandwidth Targets

We expect networking requirements to be the biggest hurdle to verifying Solana from home. Confirmed blocks themselves do not take up much data: Solana transactions are capped at 1,232 bytes (the 1,280-byte IPv6 minimum MTU minus 48 bytes of IP and UDP headers), and most transactions use far less than that. Vote transactions, which currently make up a large share of the transactions in each block, use only 319 bytes. Our most recent check of block sizes through the getConfirmedBlock RPC method (using curl's --compressed flag) returned blocks of roughly 1 to 1.3 MB.

At 10k transactions per second, we can thus estimate a very conservative steady-state bandwidth of ~100 Mbit/s (10,000 transactions/s × 1,232 bytes × 8 bits/byte ≈ 98.6 Mbit/s) when requesting blocks through RPC calls or Geyser (with compression), and double to triple that if receiving them through Turbine as erasure-coded shreds. The caveat is that when a node starts from a snapshot, the bandwidth required during the initial catch-up period will be higher, since both older and newer blocks need to be downloaded.
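The steady-state figure above is simple arithmetic. The helper below reproduces it so the inputs can be varied. The function name `steadyStateMbps` is hypothetical, and the 2× and 3× Turbine multipliers simply restate the "double to triple" range given in the text rather than a measured shred overhead.

```go
package main

import "fmt"

// steadyStateMbps returns the bandwidth, in megabits per second, needed to
// receive tps transactions per second of txBytes each (1 Mbit = 1,000,000 bits).
func steadyStateMbps(tps, txBytes float64) float64 {
	return tps * txBytes * 8 / 1e6
}

func main() {
	// Worst case: every transaction at the 1,232-byte maximum.
	base := steadyStateMbps(10_000, 1232)
	fmt.Printf("RPC/Geyser: ~%.1f Mbit/s\n", base)
	// The text estimates Turbine shreds at roughly double to triple that.
	fmt.Printf("Turbine shreds: ~%.0f-%.0f Mbit/s\n", 2*base, 3*base)
}
```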